Data pipeline management is critical for companies that rely on big data to make informed decisions. However, this process can take time and effort.

With the help of Apache Airflow, an open-source platform for managing data pipelines, you can make the process easy and efficient.

This guide describes Apache Airflow’s key features, functionalities, pros, and cons, so you can determine whether it is a good choice for automating your processes. Read on to learn more.

Latest release: Apache Airflow 2.8.3

Date: March 11, 2024

What is Apache Airflow?

Apache Airflow is an open-source platform for orchestrating and monitoring complex data pipelines. Its features include robust scheduling, a web interface for monitoring pipelines as they run, and operators that let tasks call out to other tools, such as shell commands.

In addition, it allows data professionals to author, schedule, and monitor workflows using Python. Apache Airflow also has a large community of users and contributors, so you can find help when needed.

It also provides parallel task execution, data flow between tasks, automated scheduling, user management, and fault tolerance.

Community

Apache Airflow started at Airbnb as an open-source platform with a community of around 500 active members, including committers, maintainers, and contributors. The community members support the platform and help each other solve problems.

How is Airflow used?

Airflow is used to create and manage data pipelines that span on-premise and cloud environments. It gives organizations a single source of truth for managing and monitoring workflows.

Principles

- Scalable – Airflow is Python-based and has an adaptable, flexible architecture that lets Python users write their own hooks, sensors, and custom operators. As a result, Airflow suits organizations of any size, from a few users to thousands.

- Dynamic – Airflow allows dynamic pipeline generation. With Python, users can write code that discovers pipelines dynamically.

- Extensible – Airflow allows you to extend its libraries and adapt them to your environment.

- Elegant – Airflow uses the Jinja templating engine for parameterization, so its pipelines are lean and explicit.
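The "dynamic" principle can be illustrated without Airflow itself. The following is a minimal plain-Python sketch, not Airflow's API: the table names and the `build_pipeline` helper are hypothetical, and the standard library's `graphlib` stands in for the scheduler's ordering logic. It shows how a loop can generate a pipeline's tasks and dependencies from data rather than hard-coding them.

```python
from graphlib import TopologicalSorter


def build_pipeline(tables):
    """Return a mapping of task name -> set of upstream task names."""
    deps = {"start": set()}
    for table in tables:
        extract, load = f"extract_{table}", f"load_{table}"
        deps[extract] = {"start"}   # every extract waits for "start"
        deps[load] = {extract}      # each load waits for its own extract
    # A final task that waits for every load task.
    deps["report"] = {f"load_{table}" for table in tables}
    return deps


# The list of tables is an assumption; it could come from a config file.
pipeline = build_pipeline(["orders", "customers"])

# One valid execution order that respects every dependency.
order = list(TopologicalSorter(pipeline).static_order())
print(order)
```

Adding a third table to the list adds two more tasks without touching the rest of the code, which is the point of generating pipelines dynamically.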

Key Features

- Python-based – Airflow uses standard Python libraries to create workflows, which makes it easy to build and schedule workflows and to generate tasks.

- Open-source – Airflow is an open-source platform supported by a community of developers and active members.

- Useful user interface – It provides a modern web application to schedule, manage, and monitor workflows. End users can view the real-time status of completed and ongoing tasks.

- Integration – Airflow provides third-party integrations that let you run your work on platforms such as Google Cloud Platform, Amazon Web Services, and Microsoft Azure.

- Easy to use – If you have Python knowledge, you can easily deploy your workflow. You can also transfer data, manage infrastructure, and build ML models easily.

Pricing

Airflow is open source and free to use; there are no license fees.

Functionalities

Data scheduling

You can define datasets and chain them together, so that a workflow runs automatically when a dataset it depends on is updated.

Deferrable operators

Deferrable operators let you handle long-running tasks efficiently: while a task is waiting, it gives up its worker slot so other tasks can use it.
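The idea behind deferral can be sketched with Python's `asyncio`. This is an illustration of the concept only, not Airflow's operator or trigger API: the `wait_for_event` coroutine and its delays are hypothetical. Two waits share one event loop instead of each blocking a worker for the whole duration.

```python
import asyncio


async def wait_for_event(name, delay):
    # Hypothetical trigger: sleeping stands in for polling an external system.
    await asyncio.sleep(delay)
    return f"{name} ready"


async def main():
    # Both waits run concurrently on a single event loop.
    return await asyncio.gather(
        wait_for_event("file", 0.02),
        wait_for_event("job", 0.01),
    )


results = asyncio.run(main())
print(results)
```

Because nothing blocks while waiting, the total wall-clock time is close to the longest single wait rather than the sum of both.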

Dynamic task mapping

You can instantly start as many parallel tasks as necessary in response to the results of earlier tasks. Chain dynamic tasks together to speed up and simplify ETL and ELT processing.
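As a rough sketch of this fan-out pattern, the plain-Python example below starts one call per item returned by an upstream step and then combines the results. It uses the standard library's thread pool rather than Airflow (where the feature is exposed through `expand()`), and the function names and file list are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def list_files():
    # Hypothetical upstream task: the number of files is only known at run time.
    return ["a.csv", "b.csv", "c.csv"]


def process(filename):
    # Hypothetical mapped task: called once per upstream item.
    return len(filename)


def summarize(results):
    # Hypothetical downstream task: reduces the mapped results to one value.
    return sum(results)


files = list_files()
with ThreadPoolExecutor(max_workers=4) as pool:
    # pool.map preserves input order even though calls run in parallel.
    results = list(pool.map(process, files))
total = summarize(results)
print(results, total)
```

The number of parallel calls follows the length of the upstream result, so the pipeline does not need to know in advance how many files there will be.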

Event-driven workflows

You can use dynamic tasks, data-oriented scheduling, and deferrable operators to create powerful event-driven workflows that will run without any manual intervention.

TaskFlow API

You don’t need to write complicated code to move data between tasks; the TaskFlow API’s abstractions handle that for you.
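To give a feel for the style, here is a minimal plain-Python imitation of the pattern. In Airflow the decorator comes from `airflow.decorators` and values travel through XCom. The `task` decorator and `xcom` dictionary below are simplified, hypothetical stand-ins so the sketch runs without Airflow installed.

```python
import functools

xcom = {}  # hypothetical stand-in for Airflow's cross-task storage


def task(func):
    """Record each task's return value under the task's name."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        result = func(*args, **kwargs)
        xcom[func.__name__] = result
        return result
    return wrapper


@task
def extract():
    return [3, 1, 2]


@task
def transform(values):
    return sorted(values)


@task
def load(values):
    return f"loaded {len(values)} rows"


# Data moves between tasks as ordinary return values and arguments.
message = load(transform(extract()))
print(message)
```

The tasks read like normal Python functions, and passing one task's output to the next is what defines both the data flow and the dependency.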

Full REST API

You can use Airflow’s REST API to construct programmatic services.
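For example, a service could trigger a DAG run through the REST API's `dagRuns` endpoint. The sketch below only builds the request with the standard library and does not send it. The base URL, the DAG id `example_dag`, the credentials, and the assumption that basic authentication is enabled on the server are all placeholders for illustration.

```python
import base64
import json
import urllib.request


def build_trigger_request(base_url, dag_id, conf, username, password):
    """Build (but do not send) a POST request that triggers a DAG run."""
    url = f"{base_url}/api/v1/dags/{dag_id}/dagRuns"
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    body = json.dumps({"conf": conf}).encode()
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


# All values below are assumptions for a local test deployment.
request = build_trigger_request(
    "http://localhost:8080", "example_dag", {"run_date": "2024-03-11"}, "admin", "admin"
)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```

Keeping request construction separate from sending makes the call easy to inspect and test before pointing it at a real deployment.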

Good scheduler

You can run many data pipelines simultaneously, allowing hundreds or thousands of tasks to be completed in parallel with hardly any lag time.

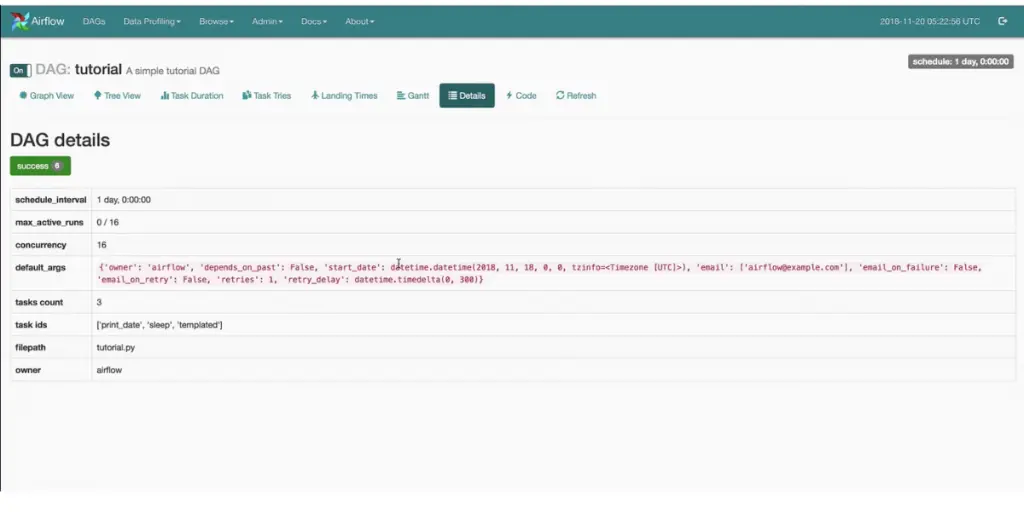

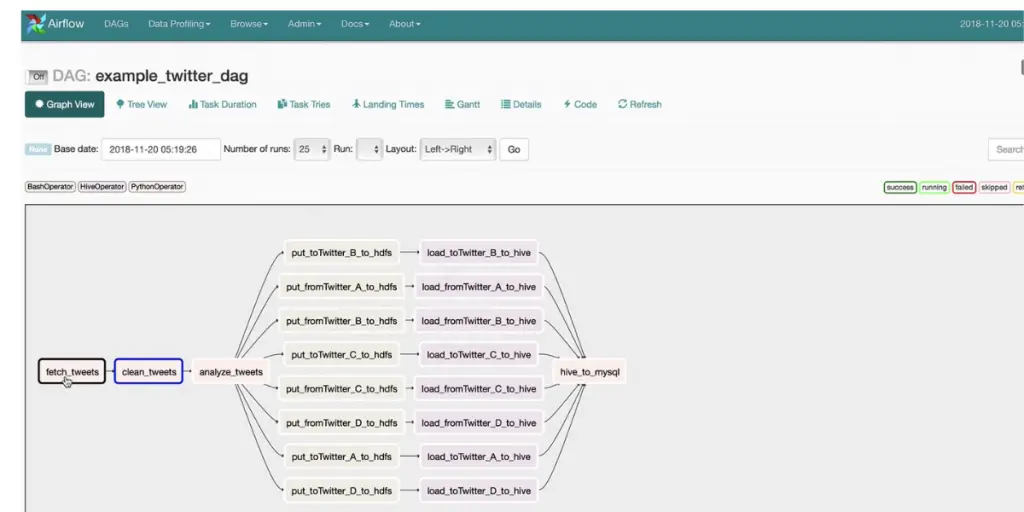

Some screenshots of Airflow

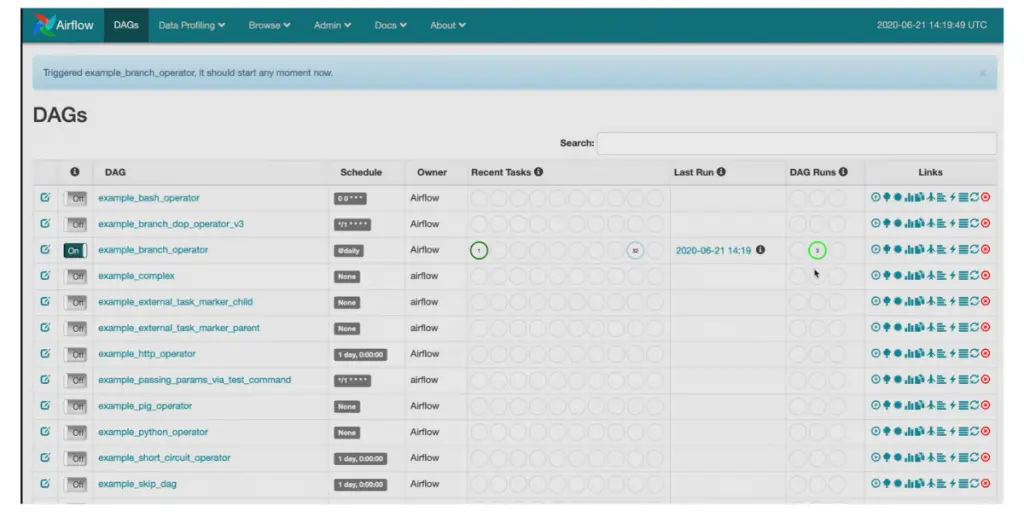

DAGs page view

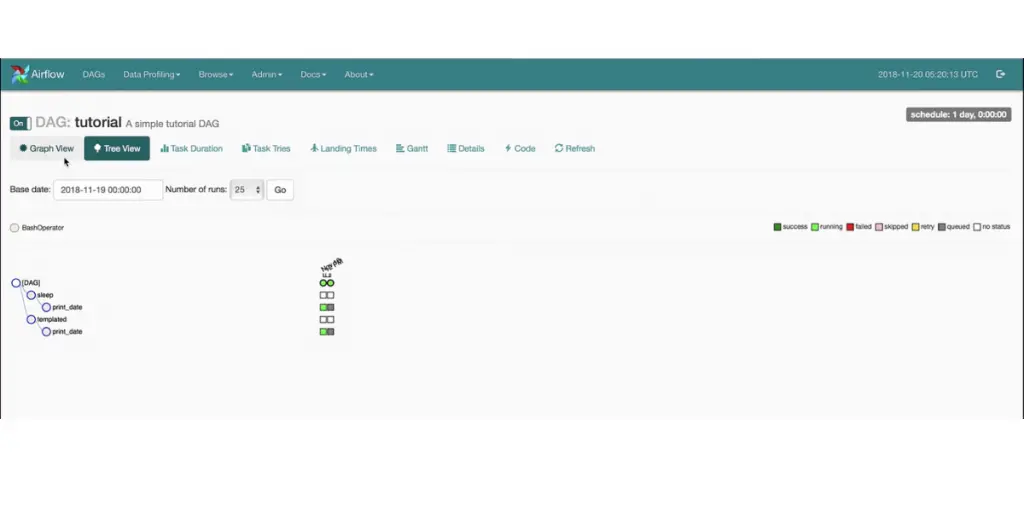

Graph view

Pros

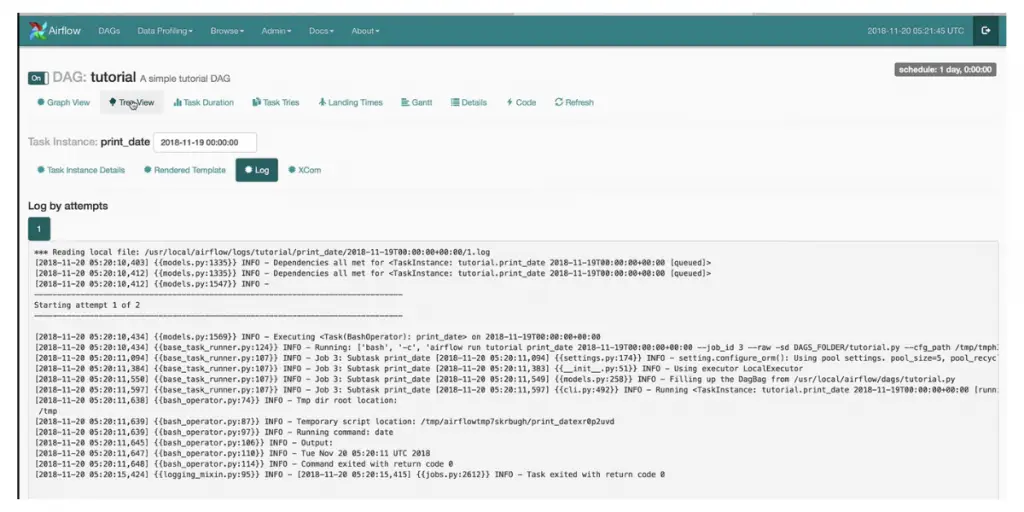

- You can easily view the status and failure of each step in the workflow.

- Good control over workflow.

- It is easy to learn because it is built on Python.

- Scheduling is very easy.

- You can track history and logs going back one year.

Cons

- The user interface could be more customizable.

- Concurrency is limited on small systems.

- Security is limited.

- The default task settings have considerable latency. Reducing it takes extra effort and time.

- DAGs and pods can take a long time to start.

- It does not auto-scale when the cluster is low on memory.

- Workflow files can occasionally become corrupted.

- Debugging can be time-consuming.

- It isn’t easy to check the quality of your data.

- It is an orchestrator, and it can behave inconsistently with long-running jobs.

- It requires technical knowledge to use this tool more efficiently.

FAQs

What problem does Apache Airflow solve?

Apache Airflow helps manage data pipelines. A data pipeline is a set of tasks that work together to collect and process data from different places. Airflow helps decide the order of the steps, when each one runs, and how to keep track of them all.
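A small plain-Python sketch can make the "order and tracking" part concrete. It is not how Airflow is implemented: the task names and the `run_pipeline` helper are hypothetical, and the standard library's `graphlib` supplies the ordering. Tasks run in dependency order, each gets a recorded status, and anything downstream of a failure is skipped.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, upstream):
    """Run callables in dependency order and record a status per task."""
    status = {}
    for name in TopologicalSorter(upstream).static_order():
        # Skip a task if any task it depends on did not succeed.
        if any(status[dep] != "success" for dep in upstream.get(name, ())):
            status[name] = "upstream_failed"
            continue
        try:
            tasks[name]()
            status[name] = "success"
        except Exception:
            status[name] = "failed"
    return status


def fail():
    raise ValueError("bad input")


# Hypothetical three-step pipeline in which the middle step fails.
tasks = {"extract": lambda: None, "transform": fail, "load": lambda: None}
upstream = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}

status = run_pipeline(tasks, upstream)
print(status)
```

The status dictionary is a tiny version of what an orchestrator shows in its monitoring views: which steps ran, which failed, and which never started because of an earlier failure.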

What is the maximum DAG (Directed Acyclic Graph) size in Airflow?

Airflow doesn’t impose a maximum DAG size. The practical limit depends on the resources available (machines, processing power, and memory). With enough resources, you can adjust Airflow’s settings to accommodate larger or longer-running DAGs.

What is the difference between Airflow and Workflow?

Airflow is a tool that helps you organize workflows into a sequence. A workflow is like a map with arrows showing which tasks come first, second, and so on. Tasks are like pieces of work, and the arrows show how they are connected and what data they use.

Conclusion

Apache Airflow is invaluable for streamlining workflows while boosting flexibility and scalability. It’s used to manage tasks with different lifecycles and to monitor data pipelines, which makes it highly efficient for ETL processes.

Despite its steep learning curve, it is a capable tool for data professionals. We’ve covered Apache Airflow’s features, functionalities, pros, and cons, and we hope you find this guide useful.
